Developer of AI/ML methods and tools

Santa Fe, New Mexico 875401, USA

Cell: +1 (505) 473 4150

Email: velimir.vesselinov@gmail.com, monty@envitrace.com

Web: monty.gitlab.io, envitrace.com

My expertise is in applied mathematics, computer science, environmental management and engineering.

My research interests are in the general areas of machine learning (ML), artificial intelligence (AI), data analytics, model diagnostics, and cutting-edge computing (including high-performance, cloud, quantum, and edge computing).

I am the inventor and lead developer of a series of novel theoretical methods and computational tools for ML/AI. I am also a co-inventor of a series of patented ML/AI methodologies.

Over the years, I have been the principal investigator of numerous projects. These projects addressed various Earth-sciences problems, including geothermal, carbon sequestration/storage, oil/gas production, climate/anthropogenic impacts, wildfires, environmental management, water supply/contamination, watershed hydrology, induced seismicity, and waste disposal. Work under these projects included tasks such as ML, AI, data analytics, statistical analyses, model development, model analyses, uncertainty quantification, sensitivity analyses, risk assessment, and decision support.

Currently, I am building a private business, EnviTrace LLC, with Trais Kliphuis. We are developing novel AI/ML methods and software tools for environmental, climate, and energy problems, with a special focus on geothermal and groundwater contamination problems.

My Ph.D. (University of Arizona, 2000) is in Hydrology and Water Resource Engineering with a minor in Applied Mathematics (adviser Regents Professor Shlomo P. Neuman). I joined LANL as a postdoc in 2000 and have been a staff member since 2001.

At LANL, I have been involved in numerous projects related to computational earth sciences, big-data analytics, modeling, model diagnostics, high-performance computing, quantum computing, and machine learning. I have authored book chapters and more than 130 research papers cited more than 2,400 times with h-index 26 (Google Scholar).

For my research work, I received a series of awards. In 2019, I was inducted into the Los Alamos National Laboratory’s Innovation Society.

I am also the lead developer of a series of groundbreaking open-source codes for machine learning, data analytics, and model diagnostics. The codes are actively used worldwide by the community. They are available on GitHub and GitLab.

One of the codes is SmartTensors: a general framework for Unsupervised, Supervised, and Physics-Informed ML/AI. In 2021, SmartTensors received two R&D100 awards.

Another code is MADS: an integrated high-performance computational framework for data analytics and model diagnostics. MADS has been integrated in SmartTensors to perform model calibration (history matching), uncertainty quantification, sensitivity analysis, risk assessment and decision analysis based on the SmartTensors ML/AI predictions.

2022 - present: Co-Founder, CTO, CSO, EnviTrace LLC, Santa Fe, New Mexico, USA

2022 - present: Founder, CEO, SmartTensors LLC, Santa Fe, New Mexico, USA

2000 - 2022: Senior Scientist, Los Alamos National Laboratory, Los Alamos, New Mexico, USA

1995 - 2000: Research Associate, Department of Hydrology and Water Resources, University of Arizona, Tucson, Arizona, USA

1989 - 1995: Associate Professor, Department of Hydrogeology and Engineering Geology, Institute of Mining and Geology, Sofia, Bulgaria

Ph.D., 2000

Department of Hydrology and Water Resources, University of Arizona, Tucson, Arizona, USA

Major: Engineering Hydrology

Minor: Applied Mathematics

Dissertation title: Numerical inverse interpretation of pneumatic tests in unsaturated fractured tuffs at the Apache Leap Research Site

Advisor: Regents Professor Dr. Shlomo P. Neuman

M.Eng., 1989

Department of Hydrogeology and Engineering Geology, Institute of Mining and Geology, Sofia, Bulgaria

Major: Hydrogeology

Minor: Engineering Geology

Dissertation title: Hydrogeological investigation in applying the Vyredox method for groundwater decontamination

Advisor: Professor Dr. Pavel P. Pentchev

More publications are available at Google Scholar, ResearchGate, and Academia.edu.

More presentations are available at SlideShare.net, ResearchGate, and Academia.edu.

More videos are available on my ML YouTube channel.

More reports are available at the LANL electronic public reading room.

Over the years, I have been the principal investigator or principal co-investigator of a series of multi-institutional, multi-million, multi-year projects funded by LANL, LDRD, DOE, ARPA-E, and industry partners.

In addition to my scientific pursuits, I have always been interested in arts.

My artwork includes drawings, photography, and acrylic paintings.

Unsupervised Machine Learning (ML) methods are powerful data-analytics tools capable of extracting important features hidden (latent) in large datasets without any prior information. The physical interpretation of the extracted features is done a posteriori by subject-matter experts.

In contrast, supervised ML methods are trained on large labeled datasets. The labeling is performed a priori by subject-matter experts. The process of deep ML commonly includes both unsupervised and supervised techniques (LeCun, Bengio, and Hinton, 2015), where unsupervised ML methods are applied to facilitate the process of data labeling.

The integration of large datasets, powerful computational capabilities, and affordable data storage has resulted in the widespread use of ML/AI in science, technology, and industry.

Recently, we have developed novel unsupervised and physics-informed ML methods. The methods utilize Matrix/Tensor Decomposition (Factorization) coupled with physics, sparsity, and nonnegativity constraints. The methods are capable of revealing the temporal and spatial footprints of the extracted features.

SmartTensors is a general framework for Unsupervised and Physics-Informed Machine Learning and Artificial Intelligence (ML/AI).

SmartTensors incorporates novel unsupervised ML methods based on tensor decomposition coupled with physics, sparsity, and nonnegativity constraints.

SmartTensors has been applied to extract the temporal and spatial footprints of the features in multi-dimensional datasets in the form of multi-way arrays or tensors.

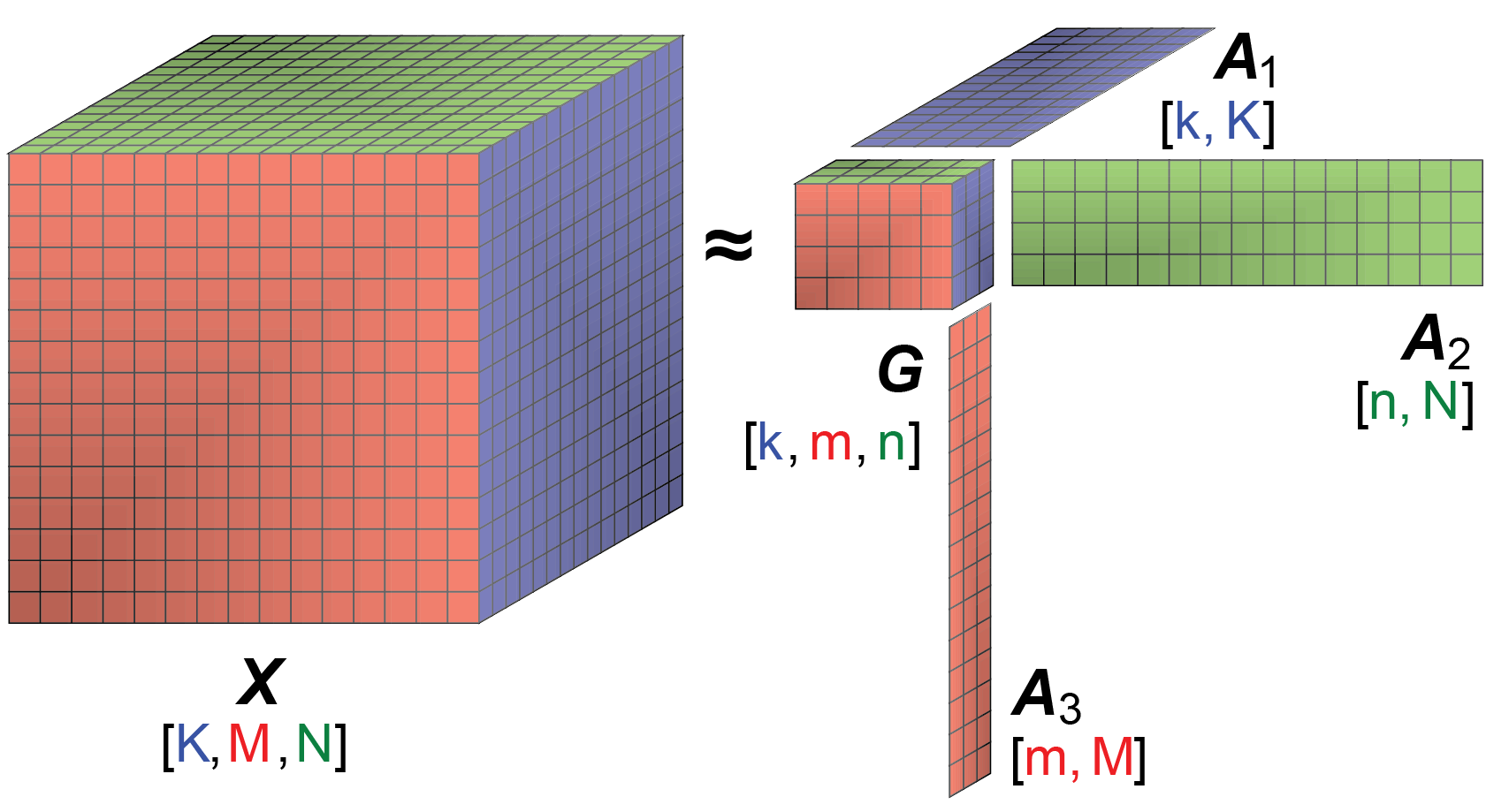

The decomposition (factorization) of a given tensor \(X\) is typically performed by minimization of the Frobenius norm:

$$ \frac{1}{2} ||X-G \otimes_1 A_1 \otimes_2 A_2 \dots \otimes_n A_n ||_F^2 $$

where:

The product \(G \otimes_1 A_1 \otimes_2 A_2 \dots \otimes_n A_n\) is an estimate of \(X\) (\(X_{est}\)).

The reconstruction error \(X - X_{est}\) is expected to be random uncorrelated noise.

\(G\) is an \(n\)-dimensional tensor with a size and a rank lower than the size and the rank of \(X\). The size of tensor \(G\) defines the number of extracted features (signals) in each of the tensor dimensions.

The factor matrices \(A_1,A_2,\dots,A_n\) represent the extracted features (signals) in each of the tensor dimensions. The number of matrix columns equals the number of features in the respective tensor dimension (if there is only 1 column, the particular factor is a vector). The number of matrix rows in each factor (matrix) \(A_i\) equals the size of tensor \(X\) in the respective dimension.

The elements of tensor \(G\) define how the features along each dimension (\(A_1,A_2,\dots,A_n\)) are mixed to represent the original tensor \(X\).
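The definitions above can be sketched in a few lines of NumPy. This is only an illustration of the Tucker-style product and the Frobenius-norm objective, not the SmartTensors implementation (which is written in Julia); the function names `tucker_reconstruct` and `objective` and the tensor sizes are hypothetical choices made for this example.

```python
import numpy as np

def tucker_reconstruct(core, factors):
    """Return G x_1 A_1 x_2 A_2 ... x_n A_n for a core tensor and factor matrices."""
    estimate = core
    for mode, factor in enumerate(factors):
        # Mode product: contract the factor's columns with axis `mode` of the
        # tensor, then move the resulting axis back into position `mode`.
        contracted = np.tensordot(factor, estimate, axes=(1, mode))
        estimate = np.moveaxis(contracted, 0, mode)
    return estimate

def objective(X, core, factors):
    """Half the squared Frobenius norm of the reconstruction error X - X_est."""
    residual = X - tucker_reconstruct(core, factors)
    return 0.5 * np.sum(residual ** 2)

rng = np.random.default_rng(0)
core = rng.random((2, 3, 2))            # G: 2, 3, and 2 features per dimension
factors = [rng.random((10, 2)),         # A_1: 10 rows, 2 features
           rng.random((8, 3)),          # A_2: 8 rows, 3 features
           rng.random((6, 2))]          # A_3: 6 rows, 2 features
X = tucker_reconstruct(core, factors)   # synthetic, noiseless 10 x 8 x 6 tensor
print(X.shape, objective(X, core, factors))
```

Here the number of columns in each factor matches the size of \(G\) along the same dimension, and the number of rows matches the size of \(X\), as described above.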

The tensor decomposition is commonly performed using Candecomp/Parafac (CP) or Tucker decomposition models.
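One way to see how the two models relate, using notation introduced here only for illustration (\(k\) for the number of features, \(\lambda_r\) for feature weights, and \(a_r^{(i)}\) for the \(r\)-th column of \(A_i\)): the CP model writes the estimate as a sum of \(k\) rank-one tensors,

$$ X_{est} = \sum_{r=1}^{k} \lambda_r \, a_r^{(1)} \circ a_r^{(2)} \circ \dots \circ a_r^{(n)} $$

where \(\circ\) denotes the outer product. This is the Tucker form with a superdiagonal core, \(G_{r r \dots r} = \lambda_r\) and all other entries of \(G\) equal to zero. In CP, every dimension therefore shares the same number of features, and features with different indices are not mixed.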

Some of the decomposition models can theoretically lead to unique solutions under specific, albeit rarely satisfied, noiseless conditions. When these conditions are not satisfied, additional minimization constraints can assist the factorization.

A popular approach is to add sparsity and nonnegativity constraints. Sparsity constraints on the elements of \(G\) reduce the number of features and their mixing (by having as many zero entries as possible). Nonnegativity enforces a parts-based representation of the original data, which also allows the tensor decomposition results for \(G\) and \(A_1,A_2,\dots,A_n\) to be easily interrelated (Cichocki et al., 2009).
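To illustrate how a nonnegativity constraint can be enforced during factorization, here is a minimal sketch for the matrix (two-dimensional) case, \(X \approx W H\). It uses the standard multiplicative update rules of Lee and Seung, chosen here because they are short and keep all entries nonnegative; this is an assumption for illustration and is not claimed to be the algorithm used in SmartTensors. The function name `nmf` and its parameters are hypothetical.

```python
import numpy as np

def nmf(X, k, iterations=500, seed=0, eps=1e-12):
    """Factorize a nonnegative matrix X (m x n) as W (m x k) @ H (k x n), W, H >= 0."""
    rng = np.random.default_rng(seed)
    m, n = X.shape
    W = rng.random((m, k)) + eps
    H = rng.random((k, n)) + eps
    for _ in range(iterations):
        # Multiplicative updates: each factor is rescaled elementwise by a
        # nonnegative ratio, so entries that start nonnegative stay nonnegative.
        H *= (W.T @ X) / (W.T @ W @ H + eps)
        W *= (X @ H.T) / (W @ H @ H.T + eps)
    return W, H

rng = np.random.default_rng(1)
X = rng.random((8, 2)) @ rng.random((2, 6))   # synthetic data with 2 features
W, H = nmf(X, k=2)
print(np.linalg.norm(X - W @ H) / np.linalg.norm(X))
```

Because every update multiplies by a nonnegative ratio, no projection or clipping step is needed to keep the factors nonnegative.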

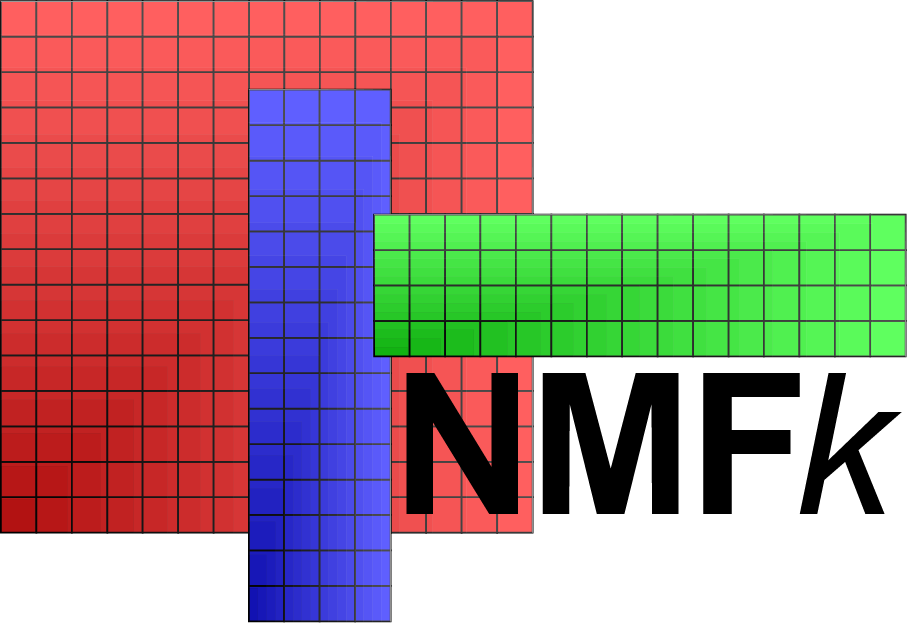

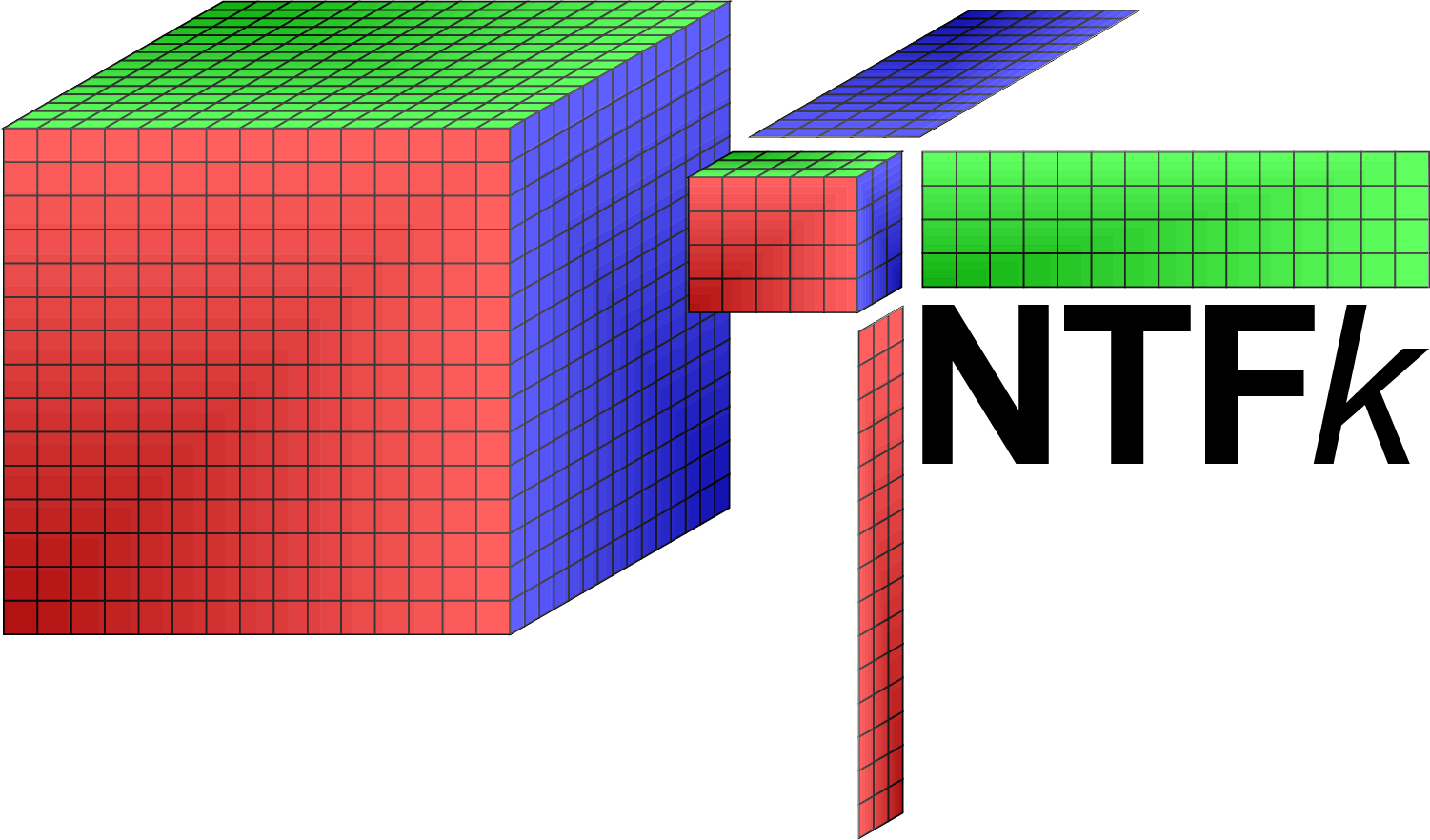

The SmartTensors algorithms NMFk and NTFk, which couple Matrix/Tensor Factorization (Decomposition) with sparsity and nonnegativity constraints and custom k-means clustering, have been developed in Julia.

SmartTensors codes are available as open source on GitHub.

Other key methods/tools for ML include:

Presentations are also available at slideshare.net.

Data analytics work executed under most of the projects and practical applications has been performed using the wide range of novel theoretical methods and computational tools developed over the years.

Key tools for data analytics include:

Model diagnostics work executed under most of the projects and practical applications has been performed using the wide range of novel theoretical methods and computational tools developed over the years.

A key tool for model diagnostics is the MADS (Model Analysis & Decision Support) framework. MADS is an integrated open-source high-performance computational (HPC) framework.

MADS can execute a wide range of data- and model-based analyses:

MADS has been tested to perform HPC simulations on a wide range of multi-processor clusters and parallel environments (Moab, Slurm, etc.).

MADS utilizes adaptive rules and techniques that allow the analyses to be performed with minimal user input.

MADS provides a series of alternative algorithms to execute each type of data- and model-based analysis.

MADS can be externally coupled with any existing simulator through integrated modules that generate input files required by the simulator and parse output files generated by the simulator using a set of template and instruction files.
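The general pattern of external coupling (fill a template to produce the simulator input, run the simulator, then extract observations from its output) can be sketched as follows. Everything in this sketch is hypothetical: the `$name` placeholder syntax, the regular-expression "instructions", and the stand-in simulator are assumptions made for illustration and do not reproduce the actual MADS template or instruction file formats.

```python
import re
from string import Template

# Hypothetical template for a simulator input file; $name marks a model parameter.
INPUT_TEMPLATE = Template("conductivity = $k\nporosity = $n\n")

# Hypothetical instructions: one regular expression per observation to extract.
OUTPUT_INSTRUCTIONS = {"head": re.compile(r"^head\s*=\s*(\S+)", re.MULTILINE)}

def write_input(parameters):
    """Fill the template with the current parameter values."""
    return INPUT_TEMPLATE.substitute(parameters)

def stand_in_simulator(input_text):
    """Placeholder for an external simulator: input text in, output text out."""
    values = dict(line.split(" = ") for line in input_text.splitlines())
    head = 100.0 - 5.0 / float(values["conductivity"])
    return f"run complete\nhead = {head}\n"

def read_output(output_text):
    """Apply the instructions to pull the observations out of the output text."""
    return {name: float(pattern.search(output_text).group(1))
            for name, pattern in OUTPUT_INSTRUCTIONS.items()}

def forward_model(parameters):
    """Parameters in, observations out: the function an analysis would call."""
    return read_output(stand_in_simulator(write_input(parameters)))

print(forward_model({"k": 2.5, "n": 0.3}))
```

With this wrapping, the simulator is treated as a black box: an analysis only ever calls `forward_model`, so the same calibration or uncertainty analysis can be reused with a different simulator by swapping the template and the instructions.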

MADS also provides internal coupling with a series of built-in analytical simulators of groundwater flow and contaminant transport in aquifers.

MADS has been successfully applied to perform various model analyses related to environmental management of contamination sites. Examples include solutions of source identification problems, quantification of uncertainty, model calibration, and optimization of monitoring networks.

The current stable version of MADS is actively updated.

Professional software/codes with somewhat similar but not equivalent capabilities are:

MADS source code and example input/output files are available at the MADS website.

MADS documentation is available at GitHub and GitLab.

The C version of the MADS code is also available: MADS C website and MADS C source. A Python interface for MADS is under development: Python.

Other key tools for model diagnostics include:

SmartTensors is a general framework for Unsupervised and Physics-Informed Machine Learning (ML) using Nonnegative Matrix/Tensor decomposition algorithms.

NMFk/NTFk (Nonnegative Matrix Factorization/Nonnegative Tensor Factorization) are two of the codes within SmartTensors.

Unsupervised ML methods can be applied for feature extraction, blind source separation, model diagnostics, detection of disruptions and anomalies, image recognition, and discovery of unknown dependencies and phenomena represented in datasets, as well as for the development of physics and reduced-order models representing the data. A series of novel unsupervised ML methods based on matrix and tensor factorizations, called NMFk and NTFk, have been developed, allowing for objective, unbiased data analyses that extract essential features hidden in data. The methodology is capable of identifying the unknown number of features characterizing the analyzed datasets, as well as the spatial footprints and temporal signatures of the features in the explored domain.
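The idea of identifying an unknown number of features can be illustrated with a simplified sketch: factorize the data for several candidate feature counts \(k\), repeat each factorization from different random starting points, and compare both the reconstruction error and how reproducible the extracted features are across restarts. This sketch is not the NMFk algorithm. It swaps in plain multiplicative-update NMF and a cosine-similarity stability score in place of the custom k-means clustering mentioned elsewhere on this page, and all function names and settings are hypothetical.

```python
import itertools
import numpy as np

def nmf(X, k, seed, iterations=300, eps=1e-12):
    """Nonnegative factorization X ~ W @ H using multiplicative updates."""
    rng = np.random.default_rng(seed)
    W = rng.random((X.shape[0], k)) + eps
    H = rng.random((k, X.shape[1])) + eps
    for _ in range(iterations):
        H *= (W.T @ X) / (W.T @ W @ H + eps)
        W *= (X @ H.T) / (W @ H @ H.T + eps)
    return W, H

def matched_similarity(W_ref, W, eps=1e-12):
    """Mean cosine similarity between columns of W_ref and the best-matching
    permutation of the columns of W (brute force, so only for small k)."""
    A = W_ref / (np.linalg.norm(W_ref, axis=0) + eps)
    B = W / (np.linalg.norm(W, axis=0) + eps)
    cosine = A.T @ B
    k = cosine.shape[0]
    return max(np.mean([cosine[i, p[i]] for i in range(k)])
               for p in itertools.permutations(range(k)))

def scan_feature_counts(X, k_values, restarts=5):
    """For each k, return (best relative error, worst-case stability over restarts)."""
    results = {}
    for k in k_values:
        runs = [nmf(X, k, seed) for seed in range(restarts)]
        errors = [np.linalg.norm(X - W @ H) / np.linalg.norm(X) for W, H in runs]
        W_ref = runs[int(np.argmin(errors))][0]   # best run is the reference
        stability = min(matched_similarity(W_ref, W) for W, _ in runs)
        results[k] = (min(errors), stability)
    return results

rng = np.random.default_rng(1)
X = rng.random((30, 3)) @ rng.random((3, 20))     # synthetic data, 3 features
results = scan_feature_counts(X, [1, 2, 3, 4])
for k, (error, stability) in results.items():
    print(k, round(error, 4), round(stability, 4))
```

A reasonable reading of such a scan is to prefer the largest \(k\) that still gives both a low reconstruction error and features that are reproducible across restarts; too many features typically fit the data but become unstable.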

SmartTensors algorithms are written in Julia.

SmartTensors codes are available as open source on GitHub.

SmartTensors can utilize various external computing platforms, including Flux.jl, TensorFlow, PyTorch, MXNet, and MATLAB.

SmartTensors is currently funded by DOE for commercial deployment (with JuliaComputing) through the Technology Commercialization Fund (TCF).

MADS (Model Analysis & Decision Support) is an integrated open-source high-performance computational (HPC) framework.

MADS can execute a wide range of data- and model-based analyses:

MADS has been tested to perform HPC simulations on a wide range of multi-processor clusters and cloud parallel environments (Moab, Slurm, etc.).

Codes with somewhat similar but not equivalent capabilities are:

MADS source code and example input/output files are available at the MADS website.

MADS documentation is available at GitHub and GitLab.

MADS old sites: LANL, LANL C, LANL Julia, LANL Python

WELLS is a code simulating drawdowns caused by multiple pumping/injecting wells using analytical solutions. WELLS has C and Julia language versions.

WELLS can represent pumping in confined, unconfined, and leaky aquifers.

WELLS applies the principle of superposition to account for transients in the pumping regime and multiple sources (pumping wells).
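The superposition principle can be sketched for the simplest case. The example below assumes the classical Theis solution for a confined aquifer as the underlying analytical solution; this choice is made for illustration, and the sketch is not the WELLS code. Function names, the schedule format, and the numerical values are hypothetical. A change in pumping rate at time \(t_0\) is treated as a new well at the same location that starts pumping the rate difference at \(t_0\), and contributions from all wells are summed.

```python
import math

EULER_GAMMA = 0.5772156649015329

def well_function(u, terms=80):
    """Theis well function W(u), i.e. the exponential integral E1(u), for u > 0."""
    if u <= 0.0:
        raise ValueError("u must be positive")
    if u <= 1.0:
        # Power series: -gamma - ln(u) - sum_{k>=1} (-u)^k / (k * k!)
        total, term = 0.0, 1.0
        for k in range(1, terms + 1):
            term *= -u / k            # term is now (-u)^k / k!
            total -= term / k
        return -EULER_GAMMA - math.log(u) + total
    # Continued fraction for u > 1, evaluated from the innermost level outward.
    f = u
    for k in range(terms, 0, -1):
        f = u + k / (1.0 + k / f)
    return math.exp(-u) / f

def theis_drawdown(t, r, Q, T, S):
    """Drawdown at distance r, a time t after pumping at constant rate Q began."""
    if t <= 0.0:
        return 0.0                    # this rate change has not happened yet
    u = r * r * S / (4.0 * T * t)
    return Q / (4.0 * math.pi * T) * well_function(u)

def superposed_drawdown(t, wells, T, S):
    """Sum drawdowns over wells and over step changes in each pumping rate.
    wells: list of (distance, [(start_time, rate), ...]) sorted by start_time."""
    total = 0.0
    for r, schedule in wells:
        previous_rate = 0.0
        for t_start, rate in schedule:
            total += theis_drawdown(t - t_start, r, rate - previous_rate, T, S)
            previous_rate = rate
    return total

# Two hypothetical wells: one pumps for 10 days and stops, one starts on day 5.
wells = [(100.0, [(0.0, 500.0), (10.0, 0.0)]),
         (250.0, [(5.0, 300.0)])]
for t in (5.0, 10.0, 20.0):
    print(t, superposed_drawdown(t, wells, T=200.0, S=1e-3))
```

Units only need to be consistent (for example meters and days). Shutting a well off is represented as a negative rate change, so the drawdown it caused recovers gradually rather than vanishing at once.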

WELLS can apply a temporal trend of water-level change to account for non-pumping influences (e.g. recharge trend).

WELLS can account for early-time behavior by using exponential functions (transmissivities and storativities; Harp and Vesselinov, 2013).

WELLS analytical solutions include:

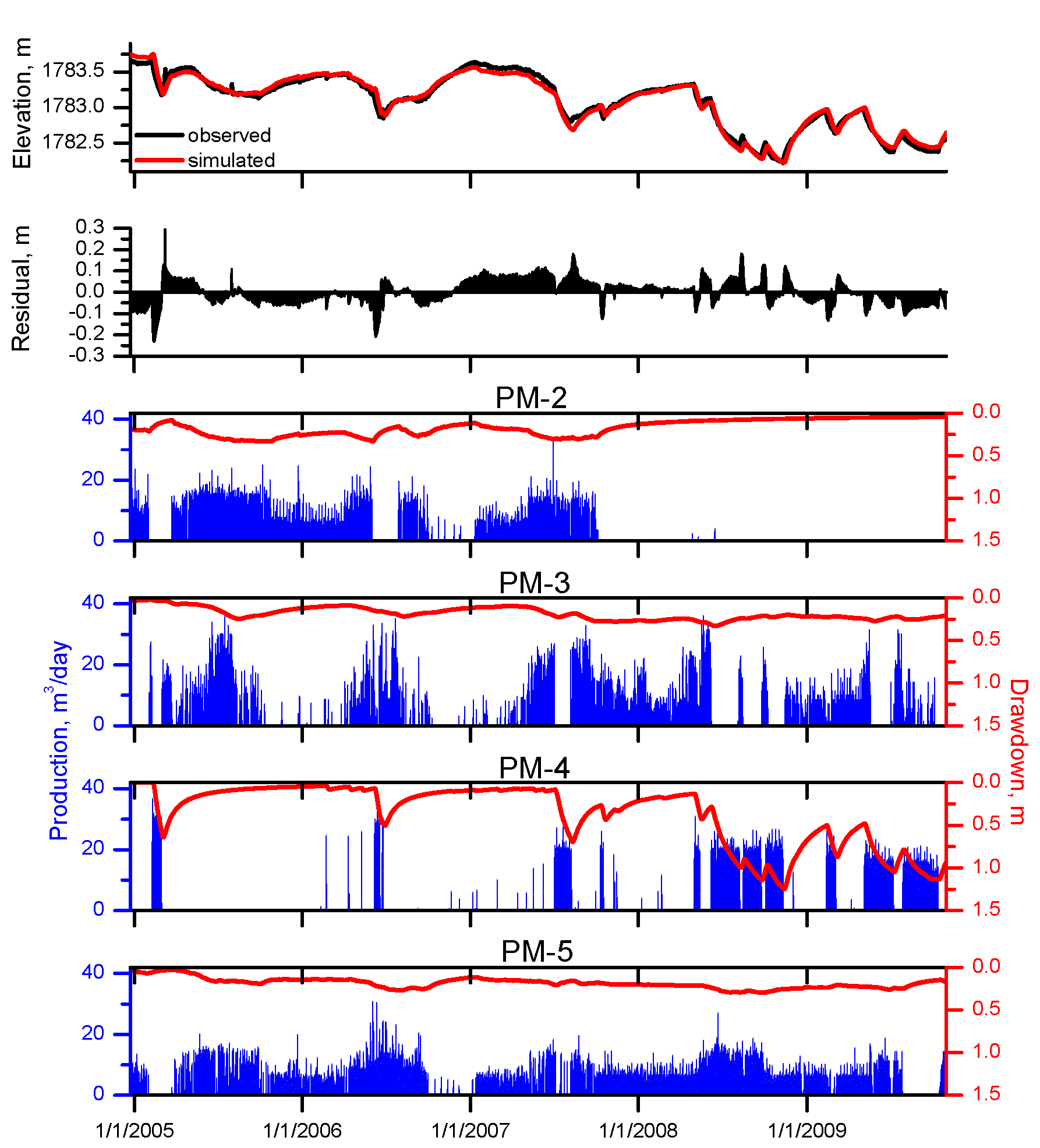

WELLS has been applied to decompose transient water-supply pumping influences in observed water levels at the LANL site (Harp and Vesselinov, 2010a).

For example, the figure below shows WELLS simulated drawdowns caused by pumping of PM-2, PM-3, PM-4 and PM-5 on water levels observed at R-15.

The model inversion of the WELLS model predictions is achieved using the code MADS.

Codes with similar capabilities are AquiferTest, AquiferWin32, Aqtesolv, MLU, and WTAQ.

WELLS source code, example input/output files, and a manual are available at the WELLS websites: LANL, GitLab, Julia, GitHub

LA-CC-10-019, LA-CC-11-098